Test AI on YOUR Website in 60 Seconds

See how our AI instantly analyzes your website and creates a personalized chatbot - without registration. Just enter your URL and watch it work!

1. Introduction: The Growing Challenge of Misinformation on Social Media

False and misleading content can spread across social media faster than human fact-checkers can review it, and the sheer volume of posts makes manual verification alone impractical. The rise of artificial intelligence (AI) offers hope for combating misinformation more effectively. With the ability to analyze vast amounts of data, detect patterns, and cross-reference claims at scale, AI is changing how platforms approach fact-checking. In this post, we explore how AI is being used to tackle misinformation, the challenges it faces, and the future of fact-checking in the age of automation.

2. Understanding Misinformation and Its Impact

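Misinformation is false or misleading information, whether or not it is shared with intent to deceive (deliberately deceptive content is often called disinformation). Its effects reach well beyond individual posts:

Political Influence: False information can influence voters, distort political debates, and even sway elections. Misinformation about candidates, policies, or voting procedures can undermine trust in democratic processes.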

Health and Safety Risks: Health misinformation, especially during crises such as the COVID-19 pandemic, can have life-threatening consequences. False claims about treatments or vaccines can cause widespread public confusion and unsafe behavior.

Erosion of Trust: Constant exposure to misleading or false information can erode trust in social media platforms, news outlets, and even governments, leading to societal divisions and skepticism.

3. How AI is Helping to Combat Misinformation

Automated Fact-Checking: AI can automate parts of the fact-checking process by comparing claims in social media posts against databases of previously fact-checked claims and other reliable sources. Models are trained to spot inconsistencies, assess whether a claim is likely to be accurate, and flag content that needs further verification.
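The matching step can be sketched in Python. This is a minimal illustration, not any platform’s actual pipeline: `FACT_CHECKS` is a hypothetical stand-in for a database of claims already rated by human fact-checkers, and similarity is plain word overlap (cosine similarity on word counts), whereas production systems typically compare learned sentence embeddings.

```python
import math
import re
from collections import Counter

# Hypothetical database of claims that human fact-checkers have already rated.
FACT_CHECKS = {
    "drinking bleach cures covid": "False",
    "the moon landing was staged": "False",
    "vaccines contain microchips": "False",
}


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its word tokens (a simple bag of words)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def match_claim(post: str, threshold: float = 0.5):
    """Return (known_claim, verdict, score) for the closest fact-check, or None."""
    post_vec = tokenize(post)
    best = max(
        ((claim, verdict, cosine(post_vec, tokenize(claim))) for claim, verdict in FACT_CHECKS.items()),
        key=lambda item: item[2],
    )
    # Only report a match when the overlap is strong enough to be meaningful.
    return best if best[2] >= threshold else None
```

For example, `match_claim("BREAKING: drinking bleach cures COVID, doctors hate it!")` returns the bleach entry with a score of about 0.71, while an unrelated post returns `None`. The threshold of 0.5 is an arbitrary assumption; raising it reduces false matches at the cost of missing reworded claims.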

Contextual Analysis: AI’s ability to analyze not just the words but also the context in which they are used is crucial for detecting misinformation. For example, AI can recognize misleading headlines, clickbait, or sensationalized narratives that are often used to spread false information.
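To make “misleading headline” signals concrete, the sketch below scores a few surface features that often accompany clickbait. The phrase list and scoring are assumptions for illustration; real systems usually learn such signals with a trained classifier rather than hand-written rules, and features like these would be only a few inputs among many.

```python
import re

# Hypothetical list of stock clickbait phrases; a real system would learn
# such signals from labeled data rather than hard-code them.
CLICKBAIT_PHRASES = (
    "you won't believe",
    "what happens next",
    "doctors hate",
    "they don't want you to know",
    "shocking",
)


def clickbait_score(headline: str) -> int:
    """Count simple surface signals that often accompany sensational headlines."""
    text = headline.lower()
    score = sum(phrase in text for phrase in CLICKBAIT_PHRASES)
    # Runs of exclamation or question marks, e.g. "!!!" or "?!".
    score += len(re.findall(r"[!?]{2,}", headline))
    # Fully capitalised words of three or more letters, e.g. "SHOCKING".
    score += len(re.findall(r"\b[A-Z]{3,}\b", headline))
    return score
```

`clickbait_score("You won't believe what happens next!!!")` returns 3 (two stock phrases plus one run of exclamation marks), while a plain headline such as "City council approves new budget" scores 0.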

Real-Time Monitoring: AI tools can monitor social media feeds and flag suspicious or harmful content as it appears. This enables quicker responses to emerging misinformation, reducing the time it has to spread.
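One simple way to catch content as it starts spreading is a sliding-window counter: flag a post for review when it is shared more than a set number of times within a recent time window. The class below is a hypothetical sketch; the window length and limit are illustrative assumptions, and it assumes share events arrive in timestamp order.

```python
from collections import deque


class ShareSpikeMonitor:
    """Flag a post when its shares within a sliding time window exceed a limit.

    The window length and limit are illustrative assumptions, not values
    used by any particular platform.
    """

    def __init__(self, window_seconds: float = 600, max_shares: int = 100):
        self.window_seconds = window_seconds
        self.max_shares = max_shares
        self.events: dict[str, deque] = {}

    def record_share(self, post_id: str, timestamp: float) -> bool:
        """Record one share; return True if the post should be flagged for review."""
        window = self.events.setdefault(post_id, deque())
        window.append(timestamp)
        # Drop shares that have fallen out of the sliding window.
        while window and window[0] <= timestamp - self.window_seconds:
            window.popleft()
        return len(window) > self.max_shares
```

A real monitor would combine a velocity signal like this with a content score, since viral posts are not necessarily false.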

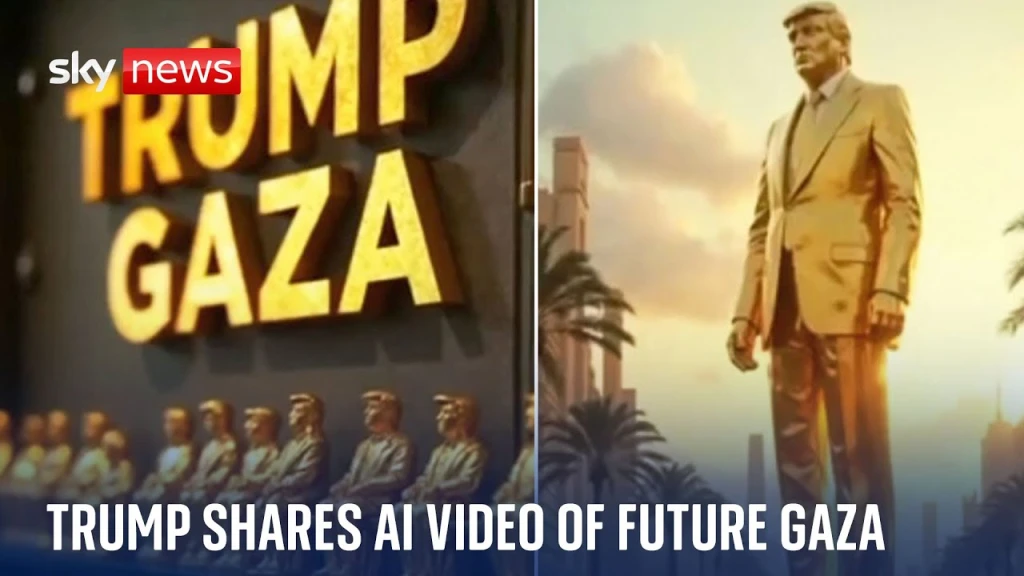

Image and Video Verification: AI can also analyze images and videos for signs of manipulation or fabrication. Detection tools can help identify altered images, deepfakes, and doctored videos, adding a layer of protection against visual misinformation.
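A classic building block for spotting reused or lightly edited images is perceptual hashing. The sketch below implements an average hash: it assumes the image has already been shrunk to a small grayscale grid (a real pipeline would do that with an imaging library), and it compares hashes by counting differing bits. This tends to catch copies of known images that have been resized or brightened; detecting deepfakes generated from scratch requires trained models and is beyond this sketch.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Compute an average hash from a small grayscale image (e.g. 8x8 values 0-255).

    Each bit is 1 if the pixel is brighter than the image's mean brightness.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")
```

Two hashes only a few bits apart suggest the same underlying picture; how many bits count as “a few” is a tuning choice.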

4. The Role of AI in Enhancing Social Media Platforms' Misinformation Policies

Detection and Reporting: AI can scan content for likely false claims and immediately notify platform moderators. Automated systems can surface problematic content much faster than human moderators, who are often overwhelmed by the sheer volume of posts.
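Once a model flags content, moderators need to see the riskiest items first. A priority queue is a natural fit. In this sketch, `risk_score` is assumed to come from an upstream classifier, and the class and method names are hypothetical.

```python
import heapq
import itertools


class ReviewQueue:
    """Hand moderators the highest-risk flagged posts first.

    risk_score is assumed to come from an upstream model, in [0, 1].
    """

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker keeps equal scores in arrival order

    def flag(self, post_id: str, risk_score: float) -> None:
        # heapq is a min-heap, so store the negated score.
        heapq.heappush(self._heap, (-risk_score, next(self._counter), post_id))

    def next_for_review(self):
        """Return the highest-risk post id, or None if the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]
```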

User Education: Some social media platforms have begun using AI to provide users with educational pop-ups or alternative perspectives when they encounter potentially misleading information. This helps to mitigate the spread of false content by prompting users to reconsider before sharing it.

Transparency and Accountability: AI tools can also trace how misleading content spreads, following reshares back to the accounts that first posted it. Knowing where and how misinformation originates is essential for improving accountability and preventing further spread.
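Tracing origins amounts to walking a reshare graph backwards. The function below assumes a hypothetical data model in which the platform records, for every reshare, the post it was reshared from; it follows those links back to the original post.

```python
def trace_origin(post_id: str, reshared_from: dict[str, str]) -> list[str]:
    """Follow reshare links back to the original post.

    reshared_from maps a post id to the id of the post it reshared; original
    posts have no entry. Returns the chain from post_id back to its origin.
    """
    chain = [post_id]
    seen = {post_id}
    while chain[-1] in reshared_from:
        parent = reshared_from[chain[-1]]
        if parent in seen:  # guard against malformed, cyclic data
            break
        chain.append(parent)
        seen.add(parent)
    return chain
```

With `{"p4": "p3", "p3": "p1"}`, `trace_origin("p4", ...)` returns `["p4", "p3", "p1"]`, identifying `p1` as the source. Aggregating origins across many flagged posts could then reveal accounts that repeatedly seed misinformation.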

Customizable Misinformation Filters: AI can be used to build misinformation filters tuned to specific communities or user groups, or adjusted to a user’s preferences and browsing habits. This allows social media platforms to tailor misinformation monitoring to the needs of different audiences.
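A per-community filter can be as simple as a configurable threshold on a model’s risk score. The sketch below is illustrative only: the community names, threshold values, and the stricter opt-in adjustment are all hypothetical policy choices.

```python
# Hypothetical per-community settings: a health forum might want borderline
# claims labeled sooner than a general-interest feed does.
THRESHOLDS = {
    "health": 0.6,
    "default": 0.8,
}


def should_label(risk_score: float, community: str, user_opt_in_strict: bool = False) -> bool:
    """Decide whether to attach a warning label to a post in a given community."""
    threshold = THRESHOLDS.get(community, THRESHOLDS["default"])
    if user_opt_in_strict:
        # Users who ask for stricter filtering get a lower bar (assumed policy).
        threshold -= 0.1
    return risk_score >= threshold
```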

5. Challenges AI Faces in Combating Misinformation

Bias in AI Algorithms: AI algorithms are only as good as the data they are trained on. If an AI system is trained on biased or incomplete data, it may struggle to identify misinformation accurately. This can result in certain false claims being overlooked or, conversely, legitimate content being wrongly flagged as false.
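One concrete way to check for this kind of bias is to compare error rates across groups, for example across the languages posts are written in. The sketch computes each group’s false positive rate (legitimate posts wrongly flagged divided by all legitimate posts), assuming human-verified labels are available for a sample of posts.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per-group false positive rate of a misinformation classifier.

    records: iterable of (group, flagged, actually_false) tuples, where group is
    e.g. the post's language, flagged is the model's decision and actually_false
    is the human-verified label. FPR = legitimate posts flagged / all legitimate posts.
    """
    flagged_ok = defaultdict(int)
    total_ok = defaultdict(int)
    for group, flagged, actually_false in records:
        if not actually_false:  # only legitimate posts count toward FPR
            total_ok[group] += 1
            if flagged:
                flagged_ok[group] += 1
    return {g: flagged_ok[g] / total_ok[g] for g in total_ok}
```

A large gap between groups, say 10% of legitimate English posts flagged versus 30% of legitimate Spanish posts, would suggest the model needs more or better training data for the disadvantaged group.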

Sophisticated Misinformation Tactics: Misinformation creators are constantly evolving their strategies to avoid detection. Techniques like using coded language, memes, and subtle disinformation can be difficult for AI to catch.
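Deliberate misspellings, such as swapping letters for look-alike digits, are one simple form of coded language used to slip past keyword filters. A common countermeasure is to normalize text before matching. The substitution table and rules below are illustrative assumptions and cover only a few obfuscation tricks.

```python
import re

# A small, assumed mapping of common character substitutions ("leetspeak").
SUBSTITUTIONS = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "@": "a", "$": "s"})


def normalize(text: str) -> str:
    """Undo simple obfuscation so keyword or claim matching sees the intended words."""
    text = text.lower().translate(SUBSTITUTIONS)
    # Remove separators inserted inside words, e.g. "v.a.c.c.i.n.e" -> "vaccine".
    text = re.sub(r"(?<=\w)[.\-_*](?=\w)", "", text)
    # Collapse characters repeated three or more times, e.g. "liiiies" -> "lies".
    text = re.sub(r"(\w)\1{2,}", r"\1", text)
    return text
```

`normalize("V@cc1n3s")` gives `"vaccines"`. Normalization like this is blunt: it also turns legitimate digits into letters (“2021” becomes “2o2i”), so it is best applied only to the copy of the text used for matching.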

Context and Nuance: AI may struggle with understanding the full context of certain content, particularly in highly nuanced situations. Humor, satire, or complex political discourse can be difficult for AI to process accurately, leading to false positives or missed falsehoods.

Over-reliance on AI: While AI is a powerful tool, it’s important to remember that it cannot replace human judgment entirely. The complexity of human language and the evolving nature of misinformation require a balanced approach that incorporates human oversight alongside AI.


6. AI and Human Collaboration: The Ideal Approach

AI as a First Line of Defense: AI can be used as an initial filter, scanning content for potential misinformation and flagging it for human review. This significantly reduces the workload of human moderators and allows them to focus on more complex cases.
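The first-line-of-defense pattern can be expressed as a simple triage rule on a model’s risk score. The thresholds here are illustrative assumptions: very high scores are labeled immediately and still queued for a human, mid-range scores go to human review, and the rest pass through.

```python
def triage(risk_score: float, auto_label_at: float = 0.95, review_at: float = 0.6) -> str:
    """Route a post based on a model's misinformation risk score in [0, 1].

    Threshold values are illustrative assumptions; platforms tune them to
    balance moderator workload against the cost of mistakes.
    """
    if risk_score >= auto_label_at:
        return "label_and_queue_for_review"
    if risk_score >= review_at:
        return "human_review"
    return "no_action"
```

Only posts scoring at or above `review_at` reach moderators, which is how this setup reduces their workload; lowering `review_at` catches more misinformation but sends more posts to humans.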

Expert Fact-Checkers: Human fact-checkers, particularly those with expertise in specific fields, can step in to evaluate and provide more context when AI detects potential misinformation. This combination helps ensure that complex and nuanced cases are handled with care and accuracy.

Training AI Models with Diverse Data: Human input is crucial in improving AI models. By continually updating and diversifying the datasets used to train AI, developers can make AI systems more adept at identifying misinformation across different languages, cultures, and contexts.
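Diversifying training data is partly a data-collection problem, but it also shows up when assembling a training set. The sketch below caps the number of examples drawn per language so that one dominant language does not swamp the rest. It is one simple technique among several (reweighting examples or collecting more data for under-represented languages are common alternatives), and the tuple layout is an assumption.

```python
import random
from collections import defaultdict


def balanced_sample(examples, per_language: int, seed: int = 0):
    """Draw up to per_language training examples from each language.

    examples: list of (language, text, label) tuples. Capping each language at
    the same count keeps one dominant language from swamping the others.
    """
    by_language = defaultdict(list)
    for example in examples:
        by_language[example[0]].append(example)
    rng = random.Random(seed)  # fixed seed makes the sample reproducible
    sample = []
    for language in sorted(by_language):
        items = by_language[language]
        sample.extend(rng.sample(items, min(per_language, len(items))))
    return sample
```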

7. The Future of AI in Misinformation Detection

Improved Language Models: AI is becoming increasingly adept at understanding context, tone, and nuance in human language. This should make AI better at identifying misinformation expressed in less direct ways, including sarcasm, humor, and indirect falsehoods.

Collaborative AI Networks: In the future, AI systems may be able to share information and learn from one another, creating a collaborative network that can more effectively detect misinformation across multiple platforms and sources.

Enhanced Detection of Deepfakes and Synthetic Media: As the technology for creating deepfakes becomes more advanced, AI tools will improve their ability to detect manipulated images, videos, and audio files, making it harder for fake content to spread undetected.

AI-Powered Policy Development: AI could also play a role in the development of better policies for handling misinformation. By analyzing patterns in misinformation, AI could help social media companies create more targeted and effective strategies for preventing false claims from going viral.

8. Conclusion: Leveraging AI to Restore Trust in Social Media

While challenges remain, AI offers a promising tool in the fight against fake news and misleading content. As AI technology continues to evolve, we can expect even more innovative ways to ensure that the information we encounter online is accurate, trustworthy, and valuable.